Revisiting Biology: AI-Powered Visual Classification of Mollusca

Exploring Aesthetic Patterns Through a Non-Scientific Lens

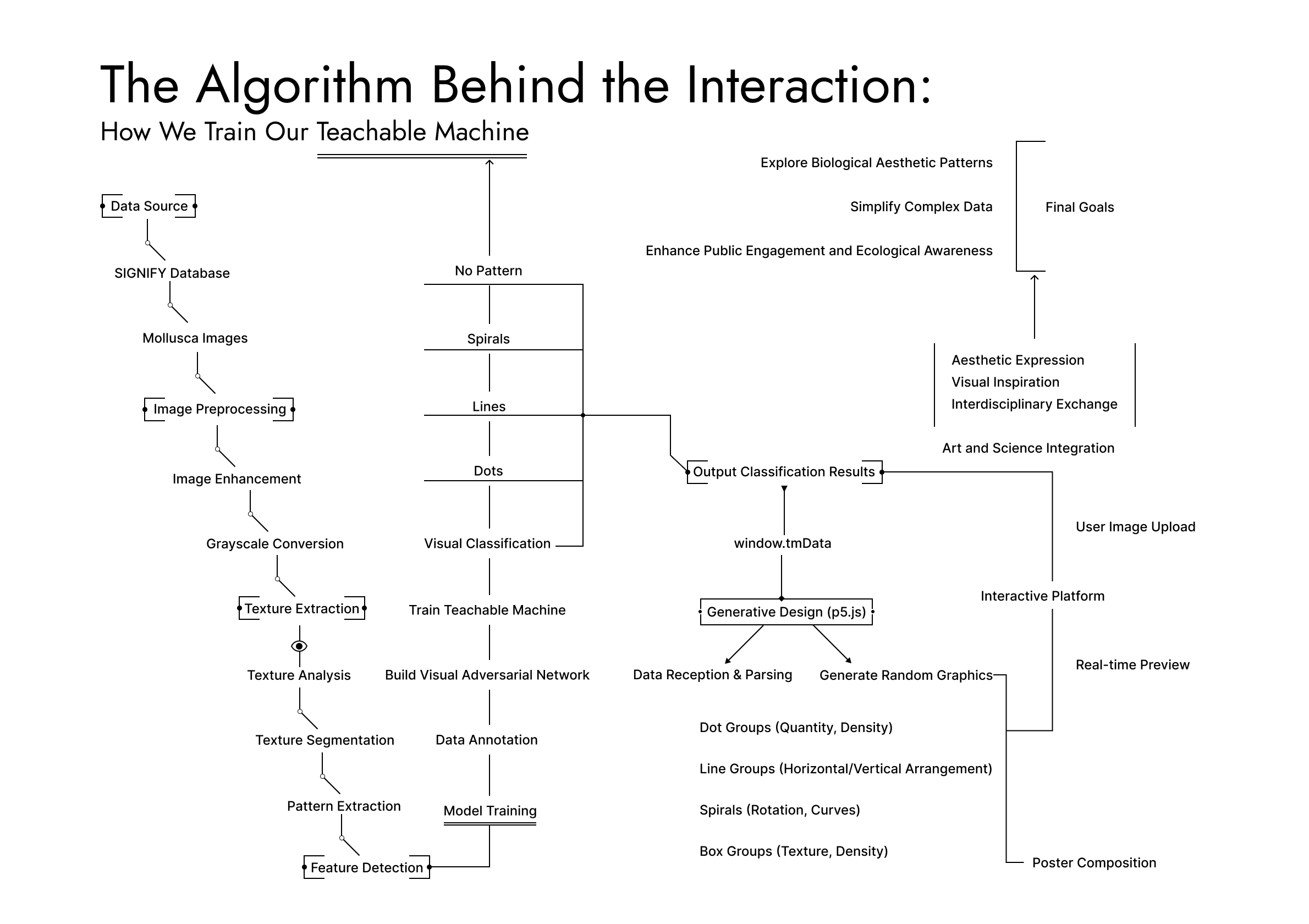

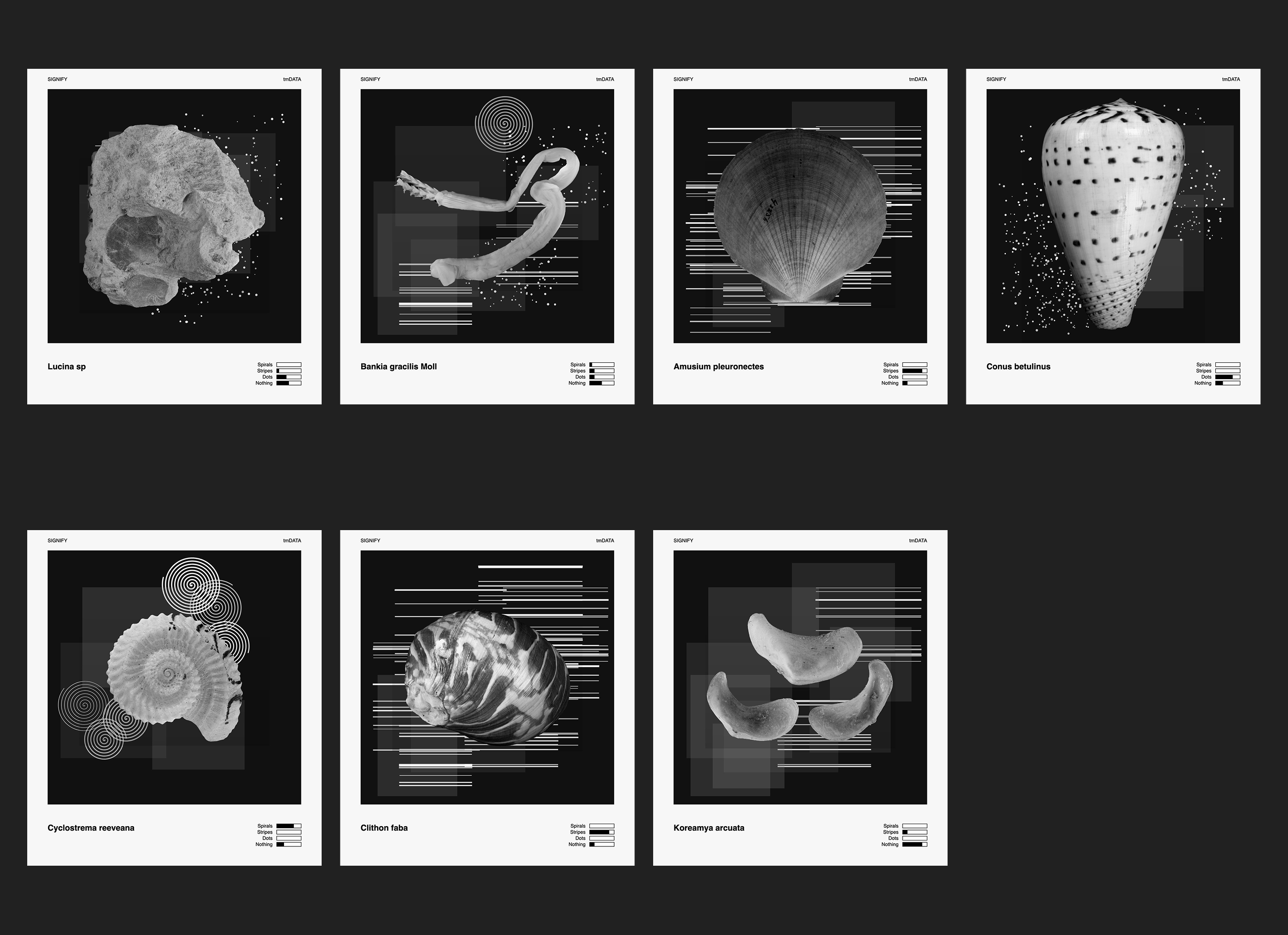

This project focuses on extracting textures from Mollusca (soft-bodied animals) in the SIGNIFY database. By training a model in Teachable Machine, we built our own image classifier to serve as a non-scientific visual classification method. Rather than following traditional scientific standards, the method uses visual recognition to simplify and generalize the textures and patterns of these organisms, exploring the aesthetic patterns and mysteries of nature. The classification results from Teachable Machine are then fed into p5.js to design generative posters. By combining artificial intelligence with generative design, the project showcases the diversity of visual elements in soft-bodied animals and their potential aesthetic connections.
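The hand-off from Teachable Machine to p5.js can be pictured as choosing the most confident class for each image. The TypeScript below is a minimal sketch under stated assumptions, not the project's code. The `{ className, probability }` shape is assumed to mirror the prediction objects returned by Teachable Machine's image library. The helper `topClass`, the 0.5 confidence threshold, and the example scores are all hypothetical.

```typescript
// One prediction entry. This shape is assumed to mirror the objects
// returned by Teachable Machine's image library (className + probability).
interface Prediction {
  className: string;
  probability: number;
}

// Hypothetical helper: return the most probable class name, or null when
// nothing clears the minimum confidence (an assumed threshold).
function topClass(predictions: Prediction[], minConfidence = 0.5): string | null {
  let best: Prediction | null = null;
  for (const p of predictions) {
    // Keep the entry with the highest probability seen so far.
    if (best === null || p.probability > best.probability) {
      best = p;
    }
  }
  // Empty input or a low-confidence winner yields no label.
  if (best === null || best.probability < minConfidence) {
    return null;
  }
  return best.className;
}

// Made-up scores for a single shell image (illustrative only).
const scores: Prediction[] = [
  { className: "dots", probability: 0.12 },
  { className: "lines", probability: 0.71 },
  { className: "spirals", probability: 0.1 },
  { className: "no pattern", probability: 0.07 },
];

console.log(topClass(scores)); // lines
```

Returning `null` instead of forcing a label lets a poster sketch skip or flag images the classifier is unsure about. Whether the project did this is not stated.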

Mollusca Design Framework: How We Classify Our Elements

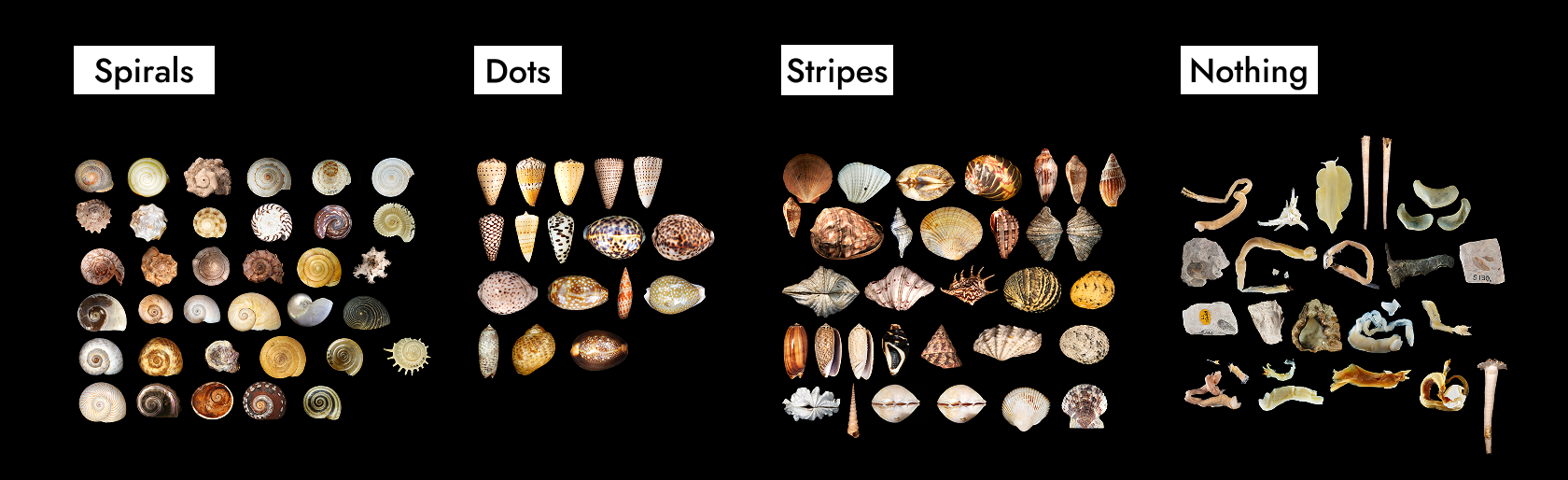

We visually classify soft-bodied animals based on the different patterns they exhibit, dividing them mainly into four categories: dots, lines, spirals, and no pattern.
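One way to turn each of the four categories into a poster motif is to give each category its own point generator. The TypeScript below illustrates this under stated assumptions; it is not the project's p5.js code. The function `motifPoints`, the grid and line counts, and the use of an Archimedean spiral (r = bθ) are all hypothetical choices. The function returns plain coordinates, so the logic runs without p5.js.

```typescript
// The four visual categories described above.
type Pattern = "dots" | "lines" | "spirals" | "no pattern";

interface Point {
  x: number;
  y: number;
}

// Hypothetical motif generator centred on (cx, cy) within a size x size area.
// All counts and shapes are illustrative assumptions.
function motifPoints(pattern: Pattern, cx: number, cy: number, size: number): Point[] {
  const points: Point[] = [];
  switch (pattern) {
    case "dots": {
      // A regular 5 x 5 grid of dot centres.
      const n = 5;
      const step = size / (n - 1);
      for (let i = 0; i < n; i++) {
        for (let j = 0; j < n; j++) {
          points.push({ x: cx - size / 2 + i * step, y: cy - size / 2 + j * step });
        }
      }
      break;
    }
    case "lines": {
      // 6 parallel horizontal lines, stored as consecutive endpoint pairs.
      const n = 6;
      const step = size / (n - 1);
      for (let i = 0; i < n; i++) {
        const y = cy - size / 2 + i * step;
        points.push({ x: cx - size / 2, y }, { x: cx + size / 2, y });
      }
      break;
    }
    case "spirals": {
      // Archimedean spiral r = b * theta over three turns; b is chosen so
      // the outermost point sits at radius size / 2.
      const samples = 120;
      const maxTheta = 3 * 2 * Math.PI;
      const b = size / 2 / maxTheta;
      for (let k = 0; k <= samples; k++) {
        const theta = (k / samples) * maxTheta;
        const r = b * theta;
        points.push({ x: cx + r * Math.cos(theta), y: cy + r * Math.sin(theta) });
      }
      break;
    }
    case "no pattern":
      // Leave the shell area empty.
      break;
  }
  return points;
}

console.log(motifPoints("spirals", 0, 0, 100).length); // 121
```

In a p5.js sketch, these points could be drawn in three ways: with `circle()` for dots, with `line()` on consecutive pairs for lines, and with `vertex()` between `beginShape()` and `endShape()` for the spiral.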

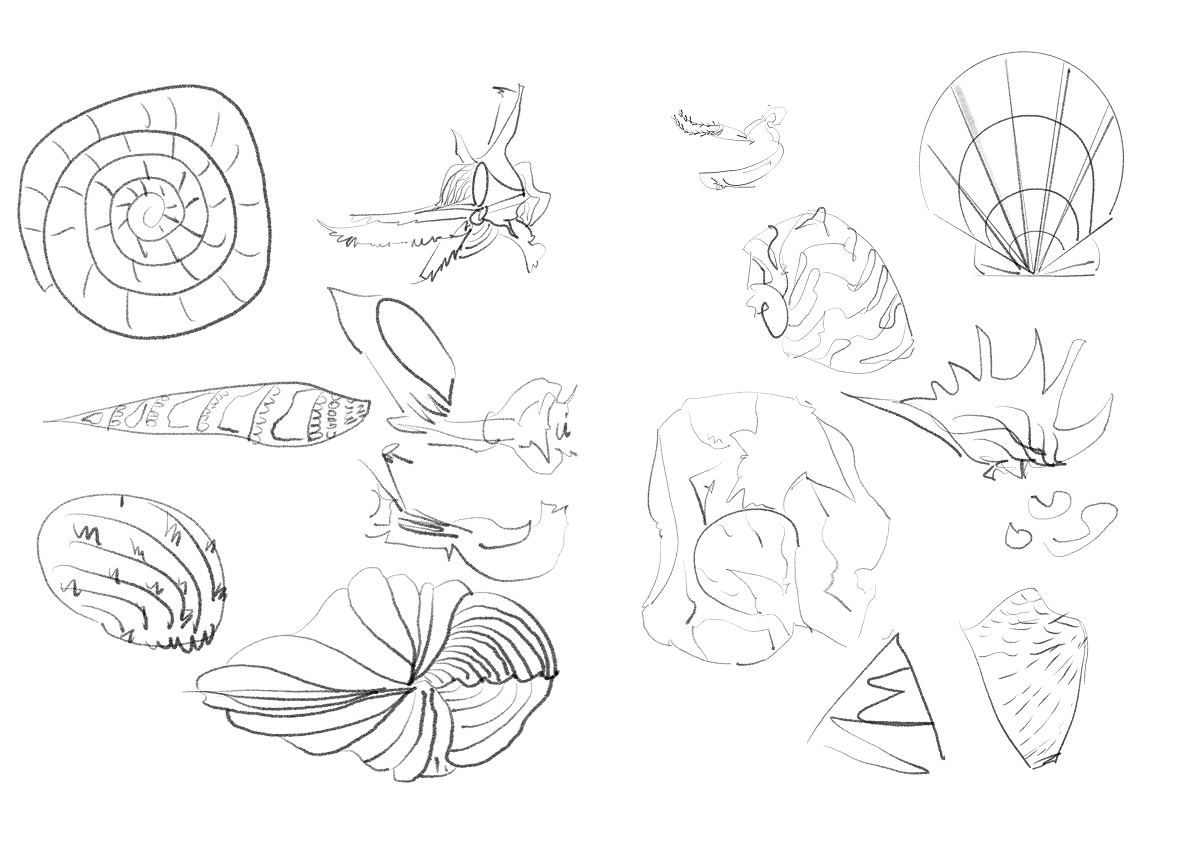

Drawings of Shells by Participants

Generative Posters of Mollusca Based on Visual Patterns

Project Background

This project was developed as part of the LASALLE x SIGNIFY collaboration with the NUS Lee Kong Chian Natural History Museum. Our aim is to explore how computational tools can make biodiversity archives more accessible, playful, and visually engaging for everyone, bridging art, science, and technology. Special thanks to our LASALLE mentor, Lim Shu Min, and to Tricia JY Cho from SIGNIFY for their invaluable guidance and for giving us the opportunity to explore, reflect, and grow throughout the project.

LASALLE College of the Arts · 2025
